Welcome to neuralSPOT

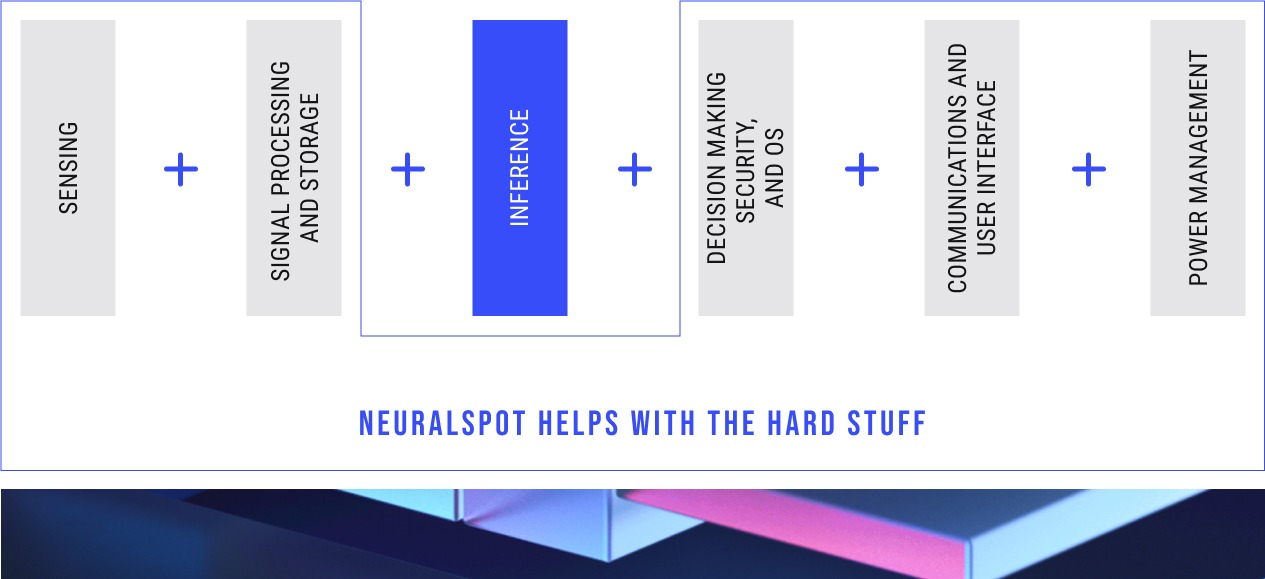

neuralSPOT is an AI-focused ADK (AI Development Kit) and toolkit which makes using Apollo4's hardware capabilities easier than ever. neuralSPOT takes care of embedded platform details, letting your data scientists focus on AI.

Because AI is hard enough.

neuralSPOT is an AI developer-focused ADK (AI Development Kit) in the true sense of the word: it includes everything you need to get your AI model onto Ambiq’s platform. You’ll find libraries for talking to sensors, managing SoC peripherals, and controlling power and memory configurations, along with tools for easily debugging your model from your laptop or PC, and examples that tie it all together.

Major Components

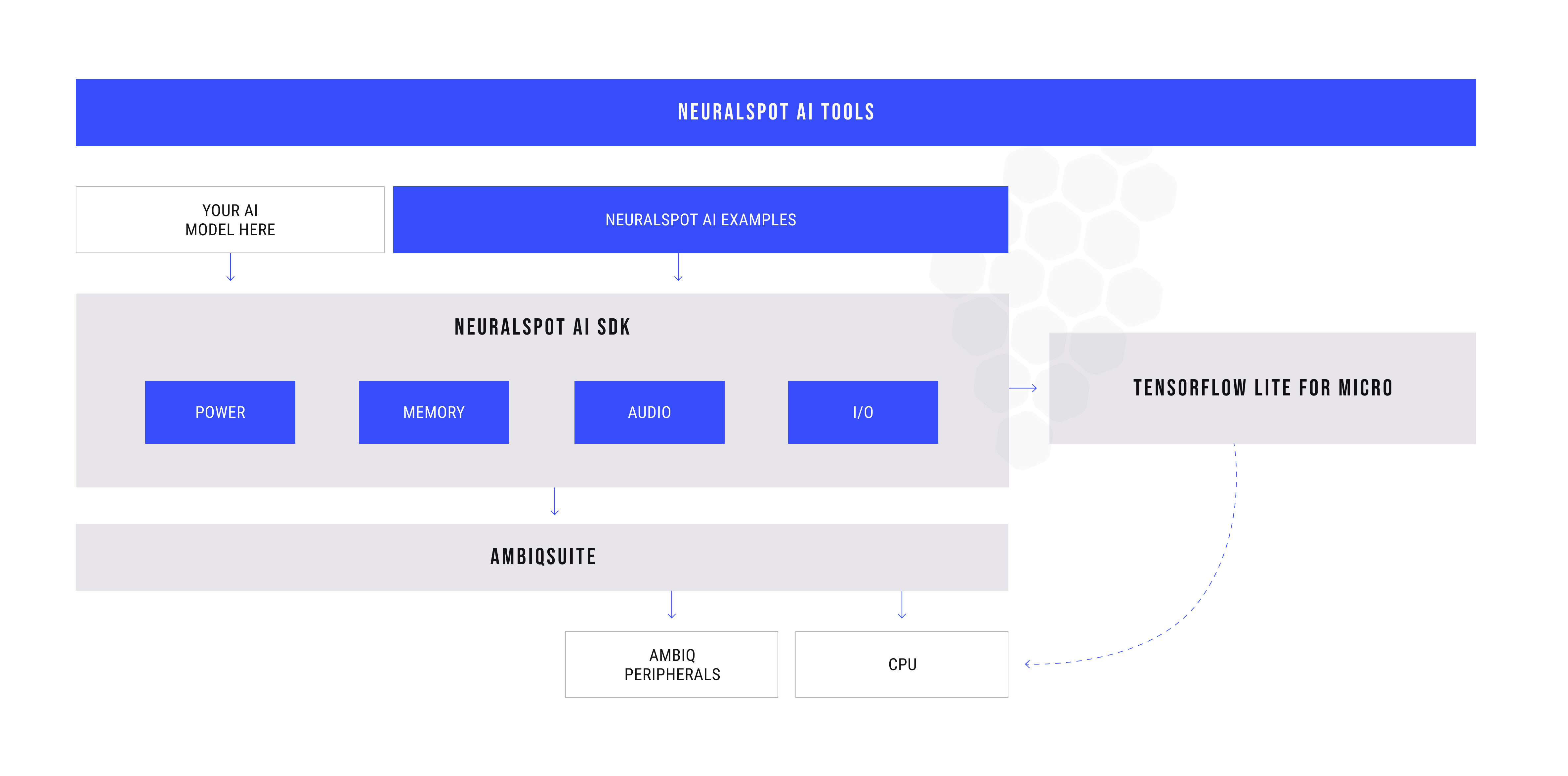

neuralSPOT includes tools, libraries, and examples to help get your AI features implemented, optimized, and deployed.

Libraries

neuralSPOT contains Ambiq-specific embedded libraries for audio, I2C, and USB peripherals and for power management, along with numerous helper functions for tasks such as interprocess communication and memory management.

Tools

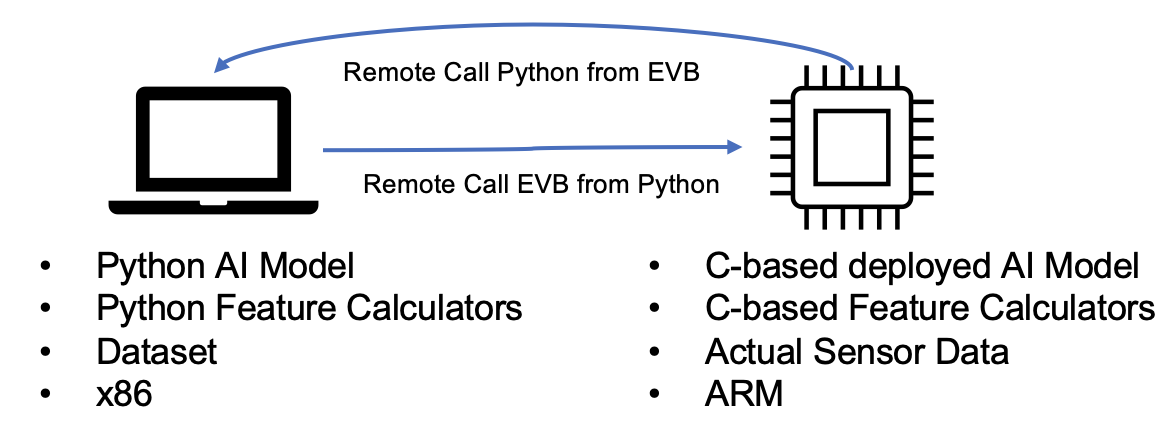

neuralSPOT offers remote procedure call support to ease cross-platform development. This enables scenarios such as running inference on a laptop using data collected on the EVB, or conversely, feeding data to a model deployed on the EVB. For example, neuralSPOT's AutoDeploy Tool uses RPC to automatically deploy, test, and profile TFLite models on Ambiq devices.
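To make the idea of a remote call concrete, here is a minimal sketch of the pattern: one side serializes a block of sensor samples, the other side decodes it, runs a model, and sends back a result. Everything in it is an assumption made for illustration. The wire format, the function names (`encode_block`, `remote_infer`, `call_remote`), and the toy threshold "model" are hypothetical and are not neuralSPOT's RPC protocol or API. The transport is a plain function call standing in for a real link between the PC and the EVB.

```python
import struct

# Hypothetical wire format for this sketch only (not neuralSPOT's RPC protocol):
# a little-endian uint16 sample count followed by that many int16 samples.

def encode_block(samples):
    """Pack a list of int16 sensor samples into bytes for transport."""
    return struct.pack(f"<H{len(samples)}h", len(samples), *samples)

def decode_block(payload):
    """Unpack bytes produced by encode_block back into a list of ints."""
    (count,) = struct.unpack_from("<H", payload, 0)
    return list(struct.unpack_from(f"<{count}h", payload, 2))

def remote_infer(payload):
    """Stand-in for the far side of the link: decode the block, run a toy
    'model' (mean absolute amplitude against a threshold), encode the reply."""
    samples = decode_block(payload)
    energy = sum(abs(s) for s in samples) / len(samples)
    label = 1 if energy > 1000 else 0
    # Reply format (also hypothetical): uint8 label, then float32 energy.
    return struct.pack("<Bf", label, energy)

def call_remote(samples, transport=remote_infer):
    """Client side: serialize, 'send' through the transport, parse the reply."""
    reply = transport(encode_block(samples))
    label, energy = struct.unpack("<Bf", reply)
    return label, energy

if __name__ == "__main__":
    print(call_remote([2000, -2000, 1500, -1500]))
```

Because the caller only sees `call_remote`, the same calling code works whichever side of the link actually runs the model, which is the property that makes the cross-platform scenarios above practical.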

Examples

neuralSPOT includes real-world AI examples demonstrating the use of its features, and sample drivers for external peripherals. Beyond that, Ambiq’s ModelZoo is built on neuralSPOT, giving developers powerful templates from which to start building AI models.

Intended Use

There is no one way to develop and deploy AI, so neuralSPOT makes as few assumptions as possible. There are three principal ways in which it is intended to be used:

- Develop the deployed model as a standalone application, to be integrated after it is working and optimized.

- Develop the model source code as a component in an existing application.

- Export a linkable, minimal self-contained static library ready to integrate into an existing application.

To support these scenarios, neuralSPOT includes everything needed to get your model running, including curated versions of AmbiqSuite and TensorFlow Lite for Microcontrollers.

The Nest

The Nest is an automatically created directory with everything you need to get TF and AmbiqSuite running together, ready for you to start developing AI features for your application. It is created for your specific target device and includes only the needed header files and static libraries, along with a basic example application stub with a main(). It is designed to be used in either of the two ways listed above; see our Nest guide for more details.

Navigating the neuralSPOT Repository

neuralSPOT lives on GitHub as an open-source, highly collaborative project which includes code, documentation, licenses, collaboration guides, and just about everything else needed to get started.

- The main repo is a useful place to get started, including the documentation section.

- Our development flow is based on Nests, described here.

- The examples document how to integrate TF Lite for Microcontrollers, how to set up and use our debug tools, and how to instantiate our various drivers.

- The neuralSPOT libraries each have detailed documentation for how to use them.

- For those interested in contributing to neuralSPOT or adapting it to their projects, we have a Developers Guide.

- Finally, the code is documented.

Using neuralSPOT

A Day in the Life of an AI Developer

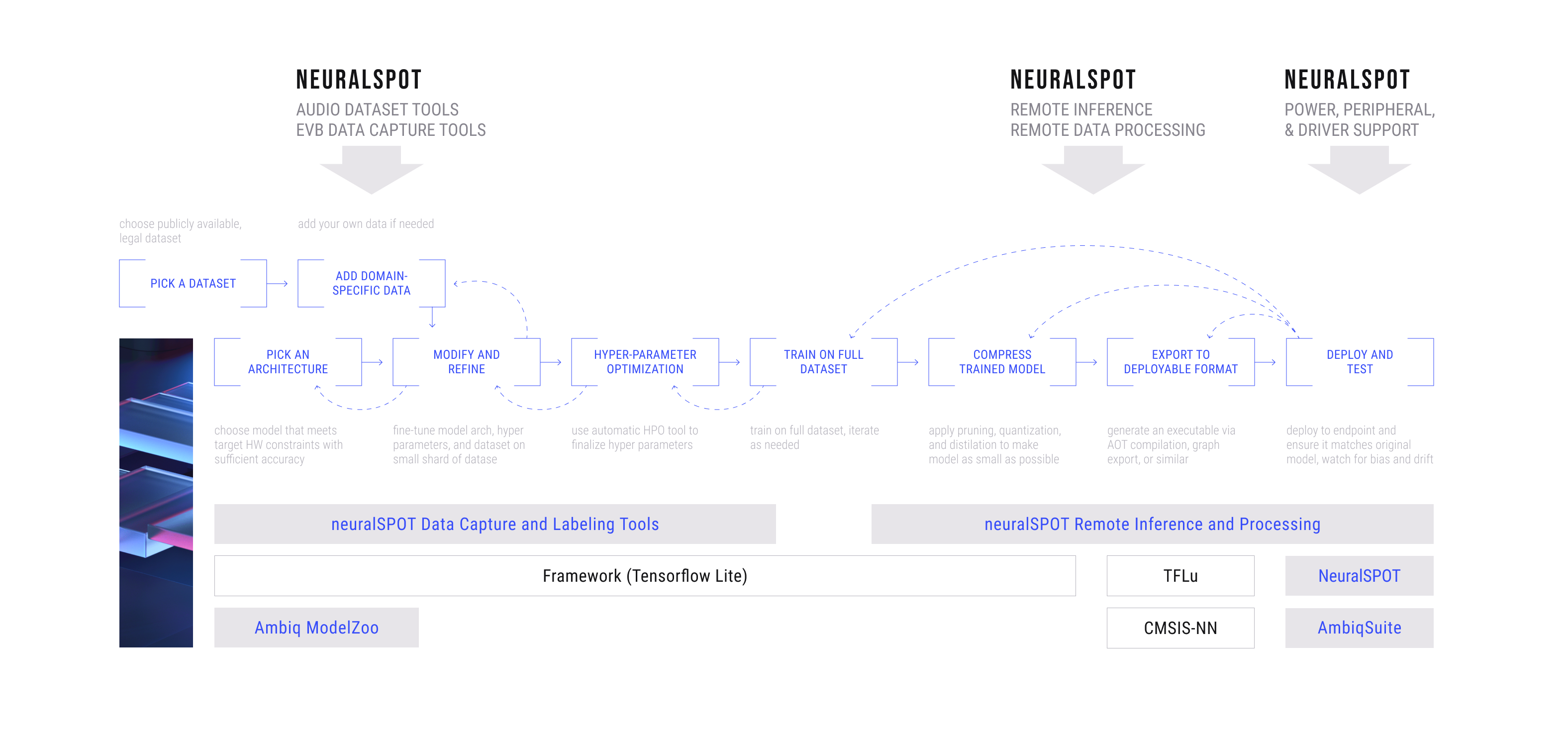

AI features are rarely developed by a single developer. It takes a team of AI specialists, data specialists, and embedded application specialists working together to develop and deploy an accurate and robust AI feature. These roles are fuzzy and frequently overlap, but for the purposes of this document let's define them as follows:

- The Data Specialist collects and curates datasets, and augments the data with appropriate noise and distortion. They may also be responsible for labeling, updating, merging, and visualizing datasets.

- The AI Specialist crafts an AI architecture in order to train a model to calculate the desired output (aka inference) based on input data. This model is usually written in a Python-based framework such as PyTorch or TensorFlow. This developer is usually in charge of compressing the model using techniques such as quantization, pruning, and distillation.

- The Application Specialist is in charge of instantiating the AI model, hooking it up to the platform’s sensors, invoking it, and interpreting the results. Models are typically instantiated using one of a handful of runtimes such as TensorFlow Lite for Microcontrollers, but can also be written ‘by hand’, so to speak.

neuralSPOT is intended to help each of these developers.

neuralSPOT for Data Specialists

Data Specialists can leverage neuralSPOT to ease collection of data from real sensors onto a remote device such as a PC, and to fine-tune sensor settings to get the data into the shape the AI Specialist needs. They can also use it to test new datasets against deployed models.

See our MPU Data Capture and Audio Data Capture examples for a guide on how this can be done.

neuralSPOT for AI Specialists

AI Specialists frequently run into mismatches between a model’s behavior on a PC and on the embedded device. These mismatches arise from numeric differences (Python almost never matches C, and even within the C domain, CPU architectures differ in how they treat certain number formats), algorithmic differences, and more. The AI Specialist can leverage neuralSPOT to compare the PC and EVB feature extraction, inference, and output analysis portions of their model, eliminating the need for exact matches. For example, an AI Specialist might choose to use the embedded platform’s feature extraction code to feed the PC-side inference model implementation.

See our AutoDeploy Tool for one way to use neuralSPOT's RPC utilities to accomplish this.
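The numeric mismatches described above are easy to reproduce. The sketch below is illustrative only and does not call any neuralSPOT API: it computes the same dot product once in double precision (as Python does by default) and once with every intermediate rounded to 32-bit floats, imitating single-precision arithmetic on an embedded target, and then compares the two with a tolerance rather than exact equality. The helper names and the tolerance values are assumptions chosen for this example.

```python
import math
import struct

def to_f32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def dot_f64(a, b):
    """Reference dot product in double precision (PC-side behavior)."""
    return sum(x * y for x, y in zip(a, b))

def dot_f32(a, b):
    """Same dot product with each intermediate rounded to float32,
    imitating single-precision arithmetic on an embedded target."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_f32(acc + to_f32(to_f32(x) * to_f32(y)))
    return acc

def outputs_match(pc, evb, rel_tol=1e-4, abs_tol=1e-6):
    """Tolerance-based comparison; the tolerances here are illustrative."""
    return math.isclose(pc, evb, rel_tol=rel_tol, abs_tol=abs_tol)

# Made-up weights and features, standing in for one layer of a model.
weights = [0.1 * (i + 1) for i in range(64)]
features = [1.0 / (i + 3) for i in range(64)]

pc_result = dot_f64(weights, features)
evb_result = dot_f32(weights, features)

if __name__ == "__main__":
    print("PC  (float64):", pc_result)
    print("EVB (float32):", evb_result)
    print("within tolerance:", outputs_match(pc_result, evb_result))
```

Even a single value such as 0.1 differs between the two precisions, so bit-exact comparisons between PC and EVB outputs tend to fail for benign reasons. Comparing with a tolerance, or running the same stage on the same platform for both paths, avoids chasing those differences.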

neuralSPOT for Application Specialists

Application Specialists can use neuralSPOT as a fast way to integrate an AI model into their application. They’ll typically leverage the ready-made TensorFlow Lite for Microcontrollers examples, use neuralSPOT's audio and I2C drivers to rapidly integrate their data sources, and optimize for power, performance, and size after it all works.

See Developing using neuralSPOT for a discussion of how various workflows are supported. For developers who don't need neuralSPOT's helpers and libraries, a minimal static library including nothing but the model and runtime can be generated using the AutoDeploy Tool.